
Hanyang University announced on the 21st that a team led by Professor Kim Sang-wook (School of Computer Science) has developed 'LENA', a learning rate adjustment technology for training large-scale deep learning models.

LENA completed model training in less than half the time required by existing techniques, and it also completed training successfully in large-scale settings where previous techniques failed.

Deep learning is a technology that learns from big data using neural network models composed of many layers, and it is a core technology of the Fourth Industrial Revolution. However, learning from big data requires vast amounts of time and computing resources, which has been pointed out as a major obstacle to deep learning research and development. To overcome this, academia and industry are actively researching data parallelism, in which multiple workers learn from different portions of the data simultaneously, to accelerate deep learning.

In data-parallel training, the extent to which the workers' learning results are applied to the model is governed by the 'learning rate': the higher the learning rate, the more strongly those results are reflected in the model.
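To make the role of the learning rate concrete, here is a minimal sketch of one synchronous data-parallel update, assuming plain gradient averaging as in standard all-reduce data parallelism (this is not LENA itself). The function name `data_parallel_step` and the list-of-floats representation are illustrative assumptions.

```python
from typing import List


def data_parallel_step(params: List[float],
                       worker_grads: List[List[float]],
                       lr: float) -> List[float]:
    """One synchronous data-parallel SGD step (illustrative sketch, not LENA).

    Each entry of worker_grads is the gradient one worker computed on its own
    data shard; the results are averaged and applied to the shared model.
    """
    n_workers = len(worker_grads)
    # Element-wise mean of the workers' learning results (their gradients)
    avg_grad = [sum(g[i] for g in worker_grads) / n_workers
                for i in range(len(params))]
    # The learning rate scales how strongly the averaged result changes the model
    return [p - lr * g for p, g in zip(params, avg_grad)]
```

For example, `data_parallel_step([1.0, 2.0], [[0.25, 0.5], [0.75, 0.0]], 0.5)` returns `[0.75, 1.875]`; doubling `lr` would double the size of the change.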

Generally, as the scale of learning (the amount of data processed at once) increases, the quality of each learning result tends to improve. For this reason, large-scale training typically uses a high learning rate so that these higher-quality results are reflected more strongly in the model.
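One widely used way to raise the learning rate with the scale of learning is the "linear scaling" heuristic, sketched below. It is shown only as an illustration of the idea described above, not as the method used by Professor Kim's team; the function name and parameters are hypothetical.

```python
def scaled_learning_rate(base_lr: float, base_batch: int, batch: int) -> float:
    """Linear scaling heuristic (illustrative): grow the learning rate in
    proportion to the amount of data processed at once (the batch size)."""
    if base_batch <= 0 or batch <= 0:
        raise ValueError("batch sizes must be positive")
    return base_lr * batch / base_batch
```

Under this heuristic, a base rate of 0.1 tuned at batch size 256 becomes about 3.2 at batch size 8192. Applying one such global rate to every layer is exactly the uniform treatment that the following paragraphs call into question.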

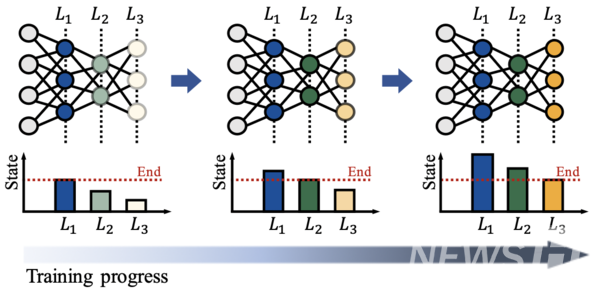

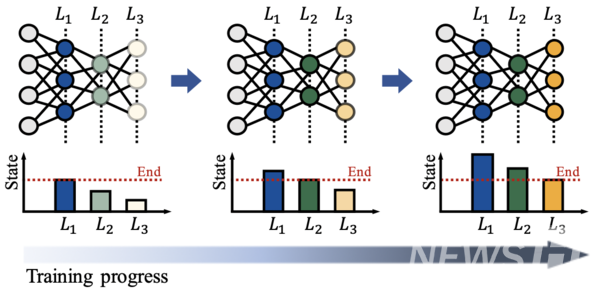

A deep learning model consists of numerous layers, each with its own distinct role. Based on this difference in roles, Professor Kim's team hypothesized that 'the optimal learning rate will differ from layer to layer', and thorough analysis confirmed that the training status differs significantly across layers.

From these analyses, the team found that existing learning rate adjustment techniques apply the same learning rate to every layer without accounting for differences in each layer's training status, and that this lowers model accuracy in data-parallel training.

Based on these findings, the team developed 'LENA', a technology that differentiates and adjusts the learning rate according to the training progress of each layer of the model. LENA tracks the model's training progress layer by layer and, based on that, determines a distinct learning rate for each layer. In addition, the team proposed a 'learning rate warm-up strategy' to ease the training instability that can occur in the early steps of large-scale data-parallel training.
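The article does not describe LENA's exact formula, so the sketch below swaps in a well-known stand-in to illustrate the general idea of per-layer learning rates combined with warm-up: the LARS-style "trust ratio" (each layer's weight norm divided by its gradient norm) plus a linear warm-up of the global rate. All names (`layerwise_learning_rates`, `linear_warmup`, `trust_coef`, the layer names) are hypothetical, and this should not be read as LENA's actual algorithm.

```python
import math
from typing import Dict, List


def linear_warmup(step: int, warmup_steps: int) -> float:
    """Ramp the global rate linearly up to full strength over warmup_steps
    (illustrative linear warm-up, not necessarily LENA's strategy)."""
    if warmup_steps <= 0:
        return 1.0
    return min(1.0, (step + 1) / warmup_steps)


def l2_norm(v: List[float]) -> float:
    return math.sqrt(sum(x * x for x in v))


def layerwise_learning_rates(weights: Dict[str, List[float]],
                             grads: Dict[str, List[float]],
                             base_lr: float,
                             step: int,
                             warmup_steps: int,
                             trust_coef: float = 0.001,
                             eps: float = 1e-9) -> Dict[str, float]:
    """Assign each layer its own learning rate (LARS-style stand-in for LENA)."""
    global_lr = base_lr * linear_warmup(step, warmup_steps)
    rates: Dict[str, float] = {}
    for name, w in weights.items():
        w_norm = l2_norm(w)
        g_norm = l2_norm(grads[name])
        if w_norm == 0.0 or g_norm == 0.0:
            # Ratio is undefined; fall back to the global rate for this layer
            trust = 1.0
        else:
            # Layers whose gradients are small relative to their weights get larger steps
            trust = trust_coef * w_norm / (g_norm + eps)
        rates[name] = global_lr * trust
    return rates
```

For instance, with `weights = {"conv1": [3.0, 4.0], "fc": [0.6, 0.8]}`, identical gradients `[0.0, 1.0]` for both layers, `base_lr=1.0`, `trust_coef=1.0`, and `warmup_steps=10`, the "conv1" layer receives a rate about five times larger than "fc" once warm-up ends, and both rates are scaled down to a tenth at step 0.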

As a result, LENA reached the same model accuracy in only 45% of the training time required by previous learning rate adjustment methods, and it achieved high model accuracy in large-scale training settings where those methods failed. Because LENA can be applied not only to existing deep learning technologies but also to those developed in the future, it is regarded as having high potential for use across many areas of AI.

This research was funded by the National Research Foundation of Korea (NRF) and the Institute of Information & Communications Technology Planning & Evaluation (IITP), and was conducted by Dr. Go Woon-yong of Hanyang University and Professor Lee Dong-won of the University of Pennsylvania.

Meanwhile, in recognition of its originality and excellence, LENA will be presented at 'The ACM Web Conference 2022 (TheWebConf2022)', one of the world's leading conferences in the field of data science.
